10/1/2014 - today

GeoPix

TouchDesigner, Python, GLSL, HTML, CSS, JS

Traditionally, pixels, DMX lights, and video walls all have different concepts of channels. A pixel of a video wall has the standard r/g/b structure common to almost all consumer video hardware. Addressable pixels can be r/g/b, but they may also be g/r/b or r/g/b/w. Further complicating the scene are DMX fixtures, which can have an arbitrary number of channels that do complex things such as pan, tilt, rotate, or actuate.

GeoPix solves this problem by abstracting the concept of a channel, allowing you to map any of a standard texture's r/g/b/a color channels to an internal named control channel. These control channels may drive a red or green diode, but may also drive a motor, focus a lens, or turn a servo.
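As a rough illustration of that abstraction, here is a minimal sketch in Python. The names `ChannelMap` and `resolve_channels` are hypothetical and not part of GeoPix; the sketch only shows how one RGBA texel could feed differently named control channels depending on the fixture.

```python
from dataclasses import dataclass

# Hypothetical names for illustration only; not GeoPix's actual data model.
CHANNEL_INDEX = {"r": 0, "g": 1, "b": 2, "a": 3}


@dataclass(frozen=True)
class ChannelMap:
    control: str  # named control channel, e.g. "pan" or "green"
    source: str   # texture channel that feeds it: "r", "g", "b" or "a"


def resolve_channels(texel, mappings):
    """Return {control_name: value} for one RGBA texel (floats in 0..1)."""
    return {m.control: texel[CHANNEL_INDEX[m.source]] for m in mappings}


# A g/r/b addressable pixel: same colors, different wire order.
grb_pixel = [ChannelMap("green", "g"), ChannelMap("red", "r"), ChannelMap("blue", "b")]
# A moving head: texture channels drive motors and a dimmer instead of diodes.
moving_head = [ChannelMap("pan", "r"), ChannelMap("tilt", "g"), ChannelMap("dimmer", "a")]

texel = (0.25, 0.5, 0.75, 1.0)
pixel_values = resolve_channels(texel, grb_pixel)
head_values = resolve_channels(texel, moving_head)
```

The same texel ends up meaning "green, red, blue" for one fixture and "pan, tilt, dimmer" for another, which is the point of naming the channels rather than assuming a color layout.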

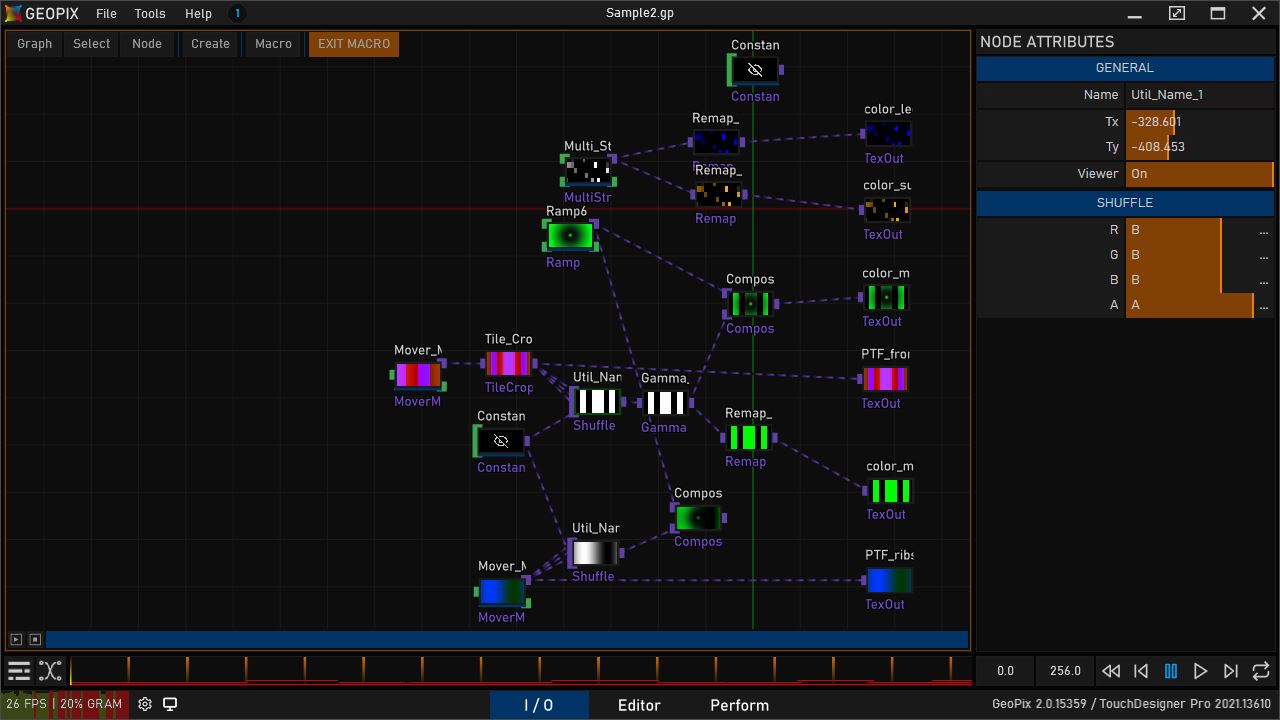

To make wrangling this many potential textures in real time feasible, I introduced a more content-oriented concept of a "Macro" into GeoPix. A Macro is essentially a node-based collection of real-time textures (videos, generative content, etc.) assembled in such a way as to deliver content synchronously to all the channels that need it. In effect, a Macro is a "look" that can apply lighting information to any or all mediums in a project.

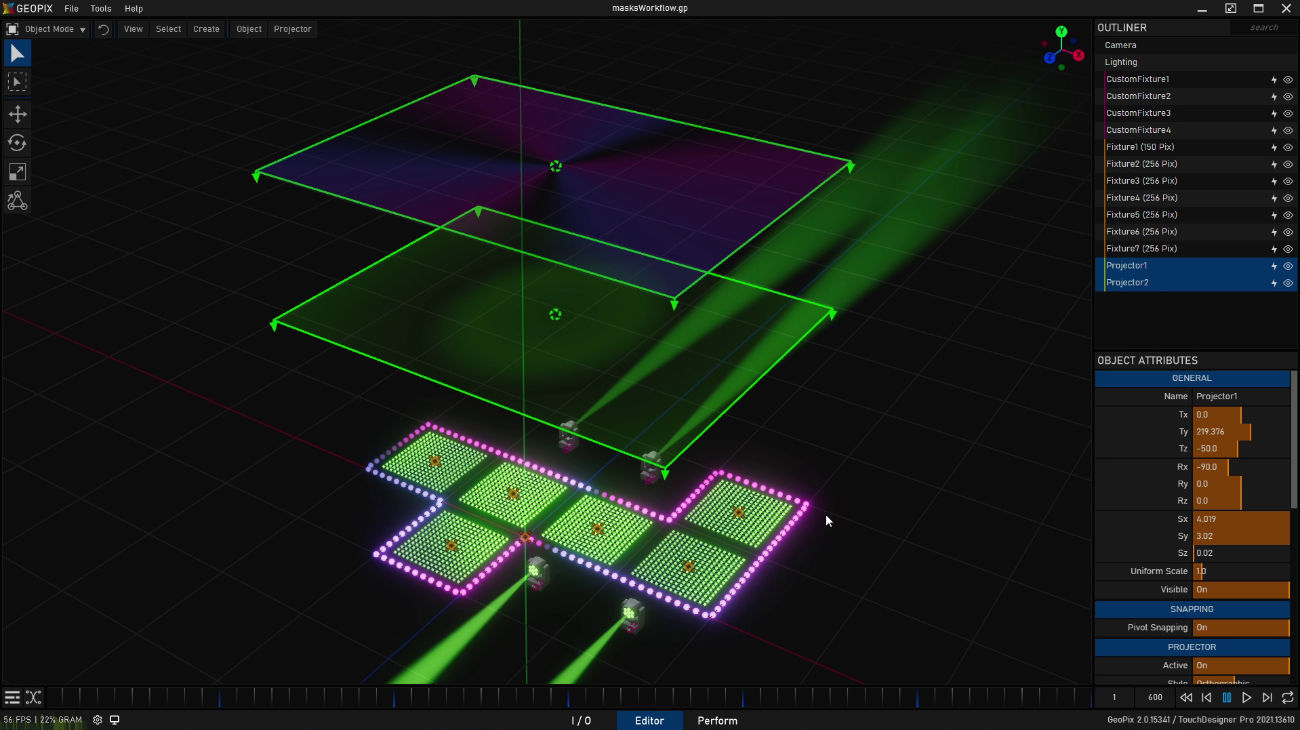

To map the 2D data coming out of the Macros onto the fixtures in the 3D world, I also introduced the concept of a digital "Projector" (not to be confused with the real-life kind!). A Projector essentially acts as the transformation matrix and conduit that allows a 3D point in world space to sample from the proper place and color channel of a Macro's 2D texture.
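The idea can be sketched in a few lines of plain Python. This is only an illustration under simple assumptions: an orthographic Projector looking straight down the Z axis, nearest-neighbor sampling, and a texture stored as nested lists. The function names are hypothetical, and GeoPix itself does this work on the GPU.

```python
def mat_vec(m, v):
    """Multiply a 4x4 matrix (list of rows) by a 4-component vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def make_ortho_projector(center, width, height):
    """Matrix mapping world XY inside a width x height window to 0..1 UV."""
    cx, cy, _ = center
    return [
        [1.0 / width, 0.0, 0.0, 0.5 - cx / width],
        [0.0, 1.0 / height, 0.0, 0.5 - cy / height],
        [0.0, 0.0, 1.0, 0.0],
        [0.0, 0.0, 0.0, 1.0],
    ]


def project_and_sample(projector, texture, point, channel):
    """Project a 3D point to UV, then read one channel of the nearest texel."""
    u, v, _, _ = mat_vec(projector, [point[0], point[1], point[2], 1.0])
    rows, cols = len(texture), len(texture[0])
    x = min(cols - 1, max(0, int(u * cols)))  # clamp to the texture edge
    y = min(rows - 1, max(0, int(v * rows)))
    return texture[y][x][channel]


# 2x2 RGBA "Macro" texture: red, green / blue, white.
texture = [
    [(1.0, 0.0, 0.0, 1.0), (0.0, 1.0, 0.0, 1.0)],
    [(0.0, 0.0, 1.0, 1.0), (1.0, 1.0, 1.0, 1.0)],
]
projector = make_ortho_projector(center=(0.0, 0.0, 0.0), width=2.0, height=2.0)

red_at_a = project_and_sample(projector, texture, (-0.5, -0.5, 3.0), channel=0)
red_at_b = project_and_sample(projector, texture, (0.5, -0.5, 0.0), channel=0)
green_at_b = project_and_sample(projector, texture, (0.5, -0.5, 0.0), channel=1)
```

Because the Z coordinate is ignored by this particular matrix, fixtures at any depth under the Projector pick up the same part of the texture, much like light passing through a slide.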

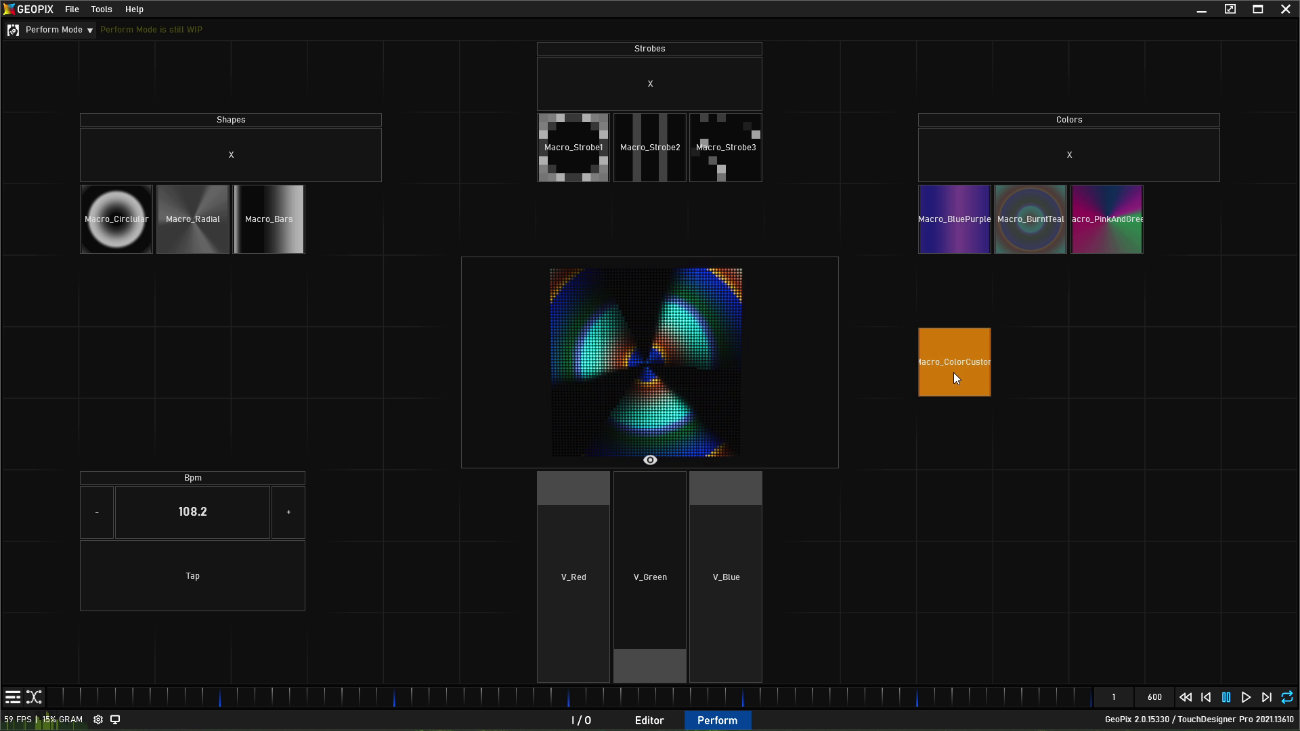

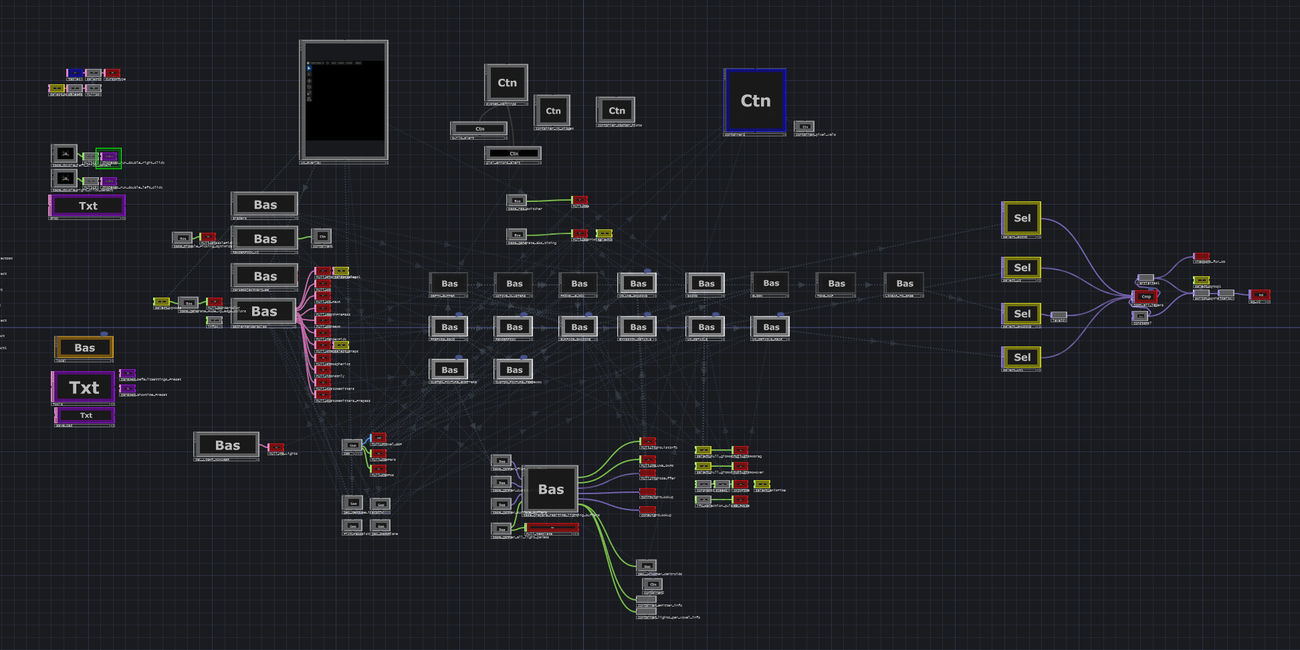

Lastly, to make GeoPix capable of being a performance tool, the "Perform Mode" features a canvas on which you can build a modular interface and layout that suits your needs. You can link and group sliders and buttons to various Macros, or simply trigger Macros as preset looks.

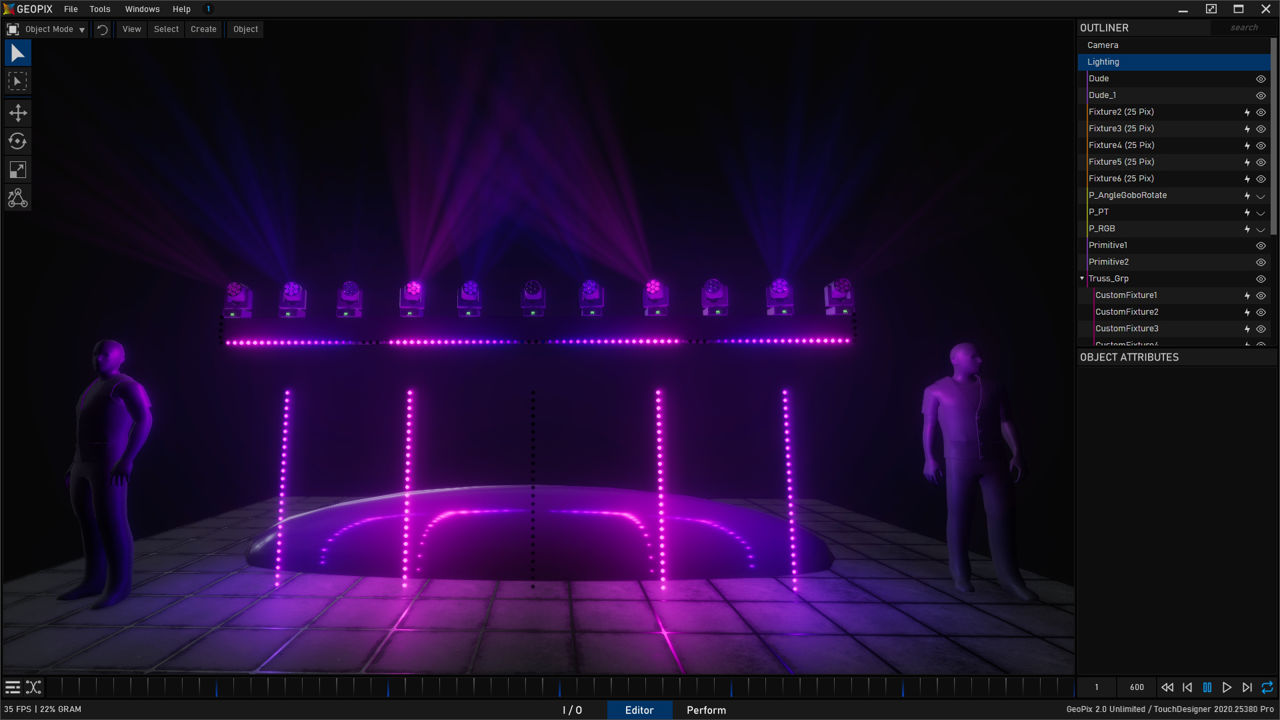

GeoPix not only has the ability to visualize a project in real time, it also can control the real lighting rig using the same project file. This allows, for instance, a VJ to practice their set on a virtual stage and switch to the real thing with minimal adjustment. Someone creating installation art may also approximate the impact that lighting will have on the venue, the walls, and generally how it will integrate with the space.

This of course requires some CAD files or 3D assets of the venue or stage. Whether to create these, and in how much detail, usually comes down to need and time, or to whether the client needs to see a design before approving a budget.

The main advantage here is that users don't have to learn several potentially expensive software products that encompass different workflows and can be difficult to integrate and manage during production. Usually this split happens between previz and lighting control, for example when a lighting console or desk is used and its output is directed to software that deals strictly in visualization.

In GeoPix, the Perform Tab is your built-in "lighting console", if you choose to use it. Of course there are many times and reasons to use external software or hardware, and GeoPix wouldn't be complete without an ample array of options for ingesting lighting control information as well.

Due to the broad variety of workflows GeoPix incorporates into one package, three unique approaches were taken in terms of UX.

The primary view, the "Editor", is a full-fledged 3D viewport with camera controls, transform tools, and selection and interaction that depend on context. I was largely inspired by various 3D software packages I use regularly, in particular Blender.

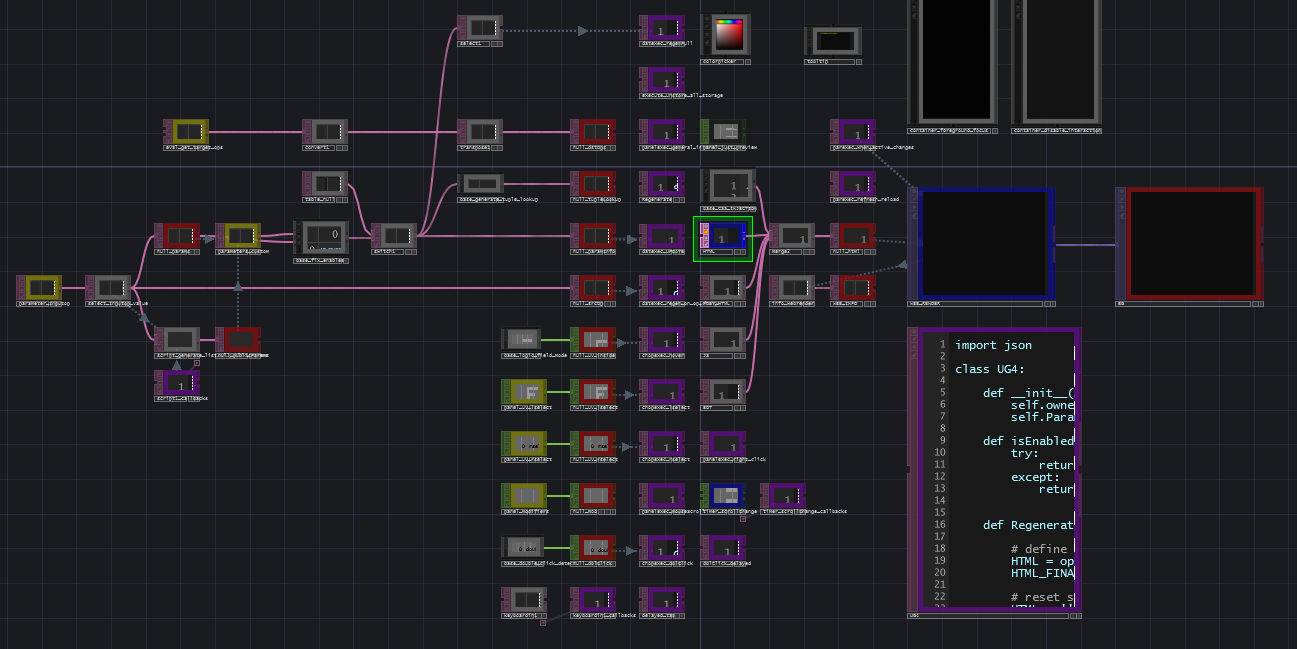

The I/O graph is the place where content is assembled into Macros through a node-based interface. The graph is a 2D workspace, so controls and navigation are much simpler.

Lastly, the Perform canvas sports a grid-based modular landscape which users can populate with their own controls, in a layout that works for their needs. The Perform canvas is a simple part of the software from a UX perspective, but gets more complicated due to its ability to be mapped to or driven by MIDI, OSC, and various other control protocols.

Outside of the three overarching UX schemes, I also needed a common, feature-rich, vertical column-style UI that would allow users to adjust dozens or hundreds of parameters quickly and easily. I developed UberGui over several years because of the need for a very fast system in GeoPix. UberGui is also open source, and easy to integrate into existing TouchDesigner projects. It uses HTML, CSS, and JS to handle the bidirectional interaction and feedback efficiently.

One of the more complex elements in GeoPix to develop, which took many months to get right, was the implementation of a JSON-based "Custom Fixture" description that would be easy enough to create and modify through a GUI tool, but abstract enough to handle rigging, creating, and visualizing just about anything the user could want to design.

I envisioned the typical scenario as the user designing a previz/control profile for lights they owned, so that they could then create a project with those profiles.

The Custom Fixture Editor, shown above in time-lapse form, can be used to create a profile for a real-life DMX light to a high degree of accuracy.

The critical constraint that made this so challenging was the necessity for this (like all other real-time structures in GeoPix) to be GPU accelerated, and tax the CPU as little as possible.

Moving vertices on the GPU is nothing new, but I had to devise a way to animate certain groups of vertices hierarchically, and also control some vertex attributes of the mesh, such as the PBR emission attribute, in a targeted way.

There was no way this would be efficient with a massively complex branching shader, so instead I opted to write a Python script that generates a custom vertex and fragment shader for each fixture profile (JSON), hard-coding all the complex conditions into a simpler, flatter body of code.

These shaders reference the final DMX texture buffer, and use these 0-255 ranged values to drive any and all described fixture parameters efficiently, without ever downloading data back from the GPU.
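To give a feel for the approach, here is a heavily simplified sketch of generating flat shader code from a fixture profile. Everything specific in it is an assumption made for illustration: the JSON fields, the GLSL names (`uDmx`, `uBase`, `groupId`, `P`, `rotateX`, `rotateY`), and the choice to store DMX values normalized to 0..1 in the red channel. It also ignores pivots and the fragment shader entirely, and it is not the actual GeoPix generator.

```python
import json

# Hypothetical fixture profile; the real GeoPix JSON schema is not shown here.
PROFILE_JSON = """
{
  "name": "demo_head",
  "parts": [
    {"group": 1, "param": "pan",  "dmx_offset": 0, "axis": "Y", "range_deg": 540},
    {"group": 2, "param": "tilt", "dmx_offset": 1, "axis": "X", "range_deg": 270}
  ]
}
"""


def generate_vertex_body(profile):
    """Emit straight-line GLSL with every offset and range baked in as a literal."""
    lines = [
        f"// generated for fixture profile: {profile['name']}",
        "vec3 pos = P;  // P, groupId, uDmx, uBase, rotateX/rotateY are assumed to exist",
    ]
    # Deepest group first, so child rotations are applied before parent ones.
    for part in sorted(profile["parts"], key=lambda p: p["group"], reverse=True):
        name = part["param"]
        lines.append(
            f"float {name} = texelFetch(uDmx, ivec2(uBase + {part['dmx_offset']}, 0), 0).r"
            f" * radians({float(part['range_deg']):.1f});"
        )
        # A 0/1 mask replaces a per-vertex branch: groups >= this one inherit the motion.
        lines.append(
            f"pos = mix(pos, rotate{part['axis']}(pos, {name}),"
            f" step({float(part['group']):.1f}, groupId));"
        )
    return "\n".join(lines)


shader_body = generate_vertex_body(json.loads(PROFILE_JSON))
print(shader_body)
```

The generated text contains no loops or conditionals over the profile: each fixture gets its own short, fixed sequence of fetches and blends, and the cost of interpreting the JSON is paid once in Python rather than per vertex on the GPU.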

To my surprise, with some additional culling optimizations it was possible to render several hundred moving lights with volumetrics at reasonable real-time rates and quality levels.

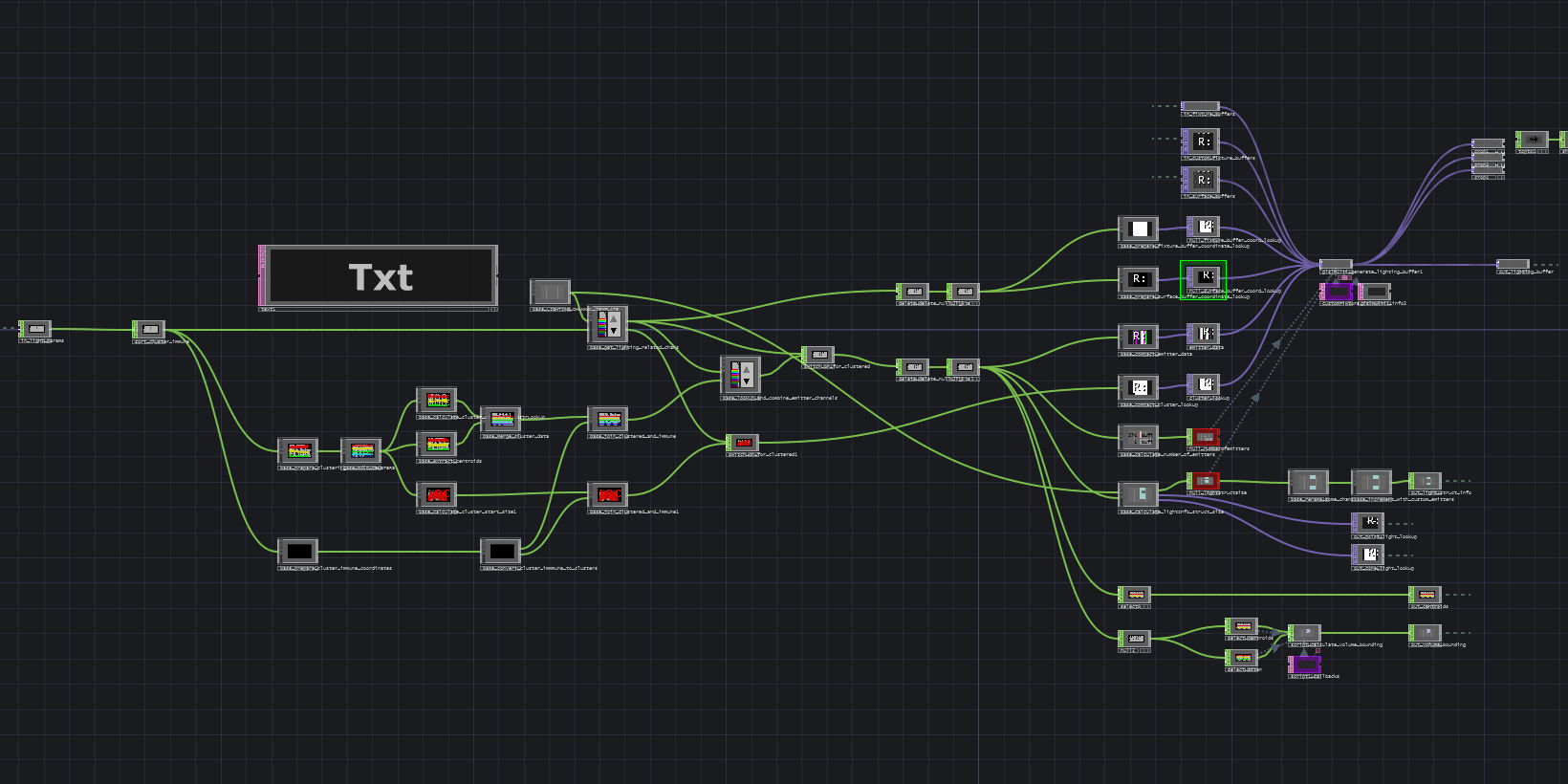

Back in the 0.9 days, a lot of the backend was made up of CPU-driven data structures. This was honestly fine for 0.9, as most users, myself included, were not going past 4-5k LEDs (back then, LEDs were all you could control).

However, GeoPix at that time had one foot in the GPU world and one in the CPU world for its real-time content-to-LED pipeline, which made scaling up and adding more complex features difficult.

For GeoPix 1.x, I bit the bullet and converted all of the real-time backend to GPU structures, and moved as much of the other larger static data to textures as made sense. I was invited to give a talk at the 2019 TouchDesigner Summit on several topics, this being one of them.

A lot of the highly scalable number crunching as well as volumetric effects now operate purely on the GPU, increasing the baseline requirements to run GeoPix, but making it far more scalable.

To show hundreds, and sometimes thousands, of surface-illuminating volumetric cone and point lights, nearly half a year of R&D went into researching optimization and culling techniques such as clustered shading, static spatial clustering, and advanced cone light culling, so that performance would hold up at those light counts.
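As one small, concrete example of the kind of test involved, below is a sketch of a commonly used conservative cone-versus-sphere check, written in Python for readability. It is not taken from GeoPix, and the function and parameter names are hypothetical. The assumption is that each cluster of the scene is wrapped in a bounding sphere, and a cone light is skipped for that cluster when the test fails.

```python
import math


def cone_touches_sphere(tip, direction, half_angle, cone_range, center, radius):
    """Conservative test: False only when the sphere is surely outside the cone.

    direction must be a unit vector; half_angle is in radians.
    """
    v = [center[i] - tip[i] for i in range(3)]
    v_len_sq = sum(c * c for c in v)
    along = sum(v[i] * direction[i] for i in range(3))  # distance along the axis
    # Distance from the axis, guarded against tiny negative rounding errors.
    off_axis = math.sqrt(max(0.0, v_len_sq - along * along))
    # Signed distance from the sphere center to the cone's side surface.
    side_dist = math.cos(half_angle) * off_axis - along * math.sin(half_angle)

    outside_side = side_dist > radius
    beyond_range = along > radius + cone_range
    behind_tip = along < -radius
    return not (outside_side or beyond_range or behind_tip)


tip, direction = (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)
half_angle, cone_range = math.radians(30.0), 10.0

in_beam = cone_touches_sphere(tip, direction, half_angle, cone_range, (0.0, 0.0, 5.0), 1.0)
off_side = cone_touches_sphere(tip, direction, half_angle, cone_range, (0.0, 10.0, 0.0), 1.0)
behind = cone_touches_sphere(tip, direction, half_angle, cone_range, (0.0, 0.0, -5.0), 1.0)
too_far = cone_touches_sphere(tip, direction, half_angle, cone_range, (0.0, 0.0, 20.0), 1.0)
```

Being conservative means the test may occasionally keep a light that does not actually reach the cluster, but it should never discard one that does, so the rendered result stays correct while most irrelevant lights are skipped.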

A recurring theme in software development, one I think many are familiar with, is that turning a feature into more than a prototype always takes significantly more time and cleverness to optimize than the initial implementation did. Oh, and don't forget the bugs! There are always plenty of them.

Below, you can see how the emitters "reflect" in the PBR shader's BRDF reflection as well as in the volumetric medium. This happens cohesively, with several culling algorithms making sure pixels don't render any more lights than they actually need to.

When I say GeoPix is built in TouchDesigner, I mean that the core environment, and the 140,000+ nodes that make up much of the back and front end are part of a programming environment that essentially acts as a very extensive class of libraries and functions for manipulating data in a variety of visual ways. This makes it very easy to break large and complicated problems into smaller ones, and track down the root of issues as they come up.

TouchDesigner networks can honestly be considered their own artform, as every programmer and designer alike lays them out differently, and organizes things in different ways.

GeoPix has a growing community of users who have done some really amazing work throughout the years, ranging from Christmas light show visualization, to concert lighting and art installations at Burning Man.

Across Discord, Facebook, and Patreon, users can interact with each other, get help when they need it, and support the project. There is also a growing collection of tutorials and guides available through GitHub and YouTube.

Below you can find a few projects that have been driven by GeoPix throughout the years.